Part 3 of our AI Security Blog Series

In our previous two blog posts, “What is Artificial Intelligence, Anyway” and “Defining Machine Learning, Deep Learning & Neural Networks,” we explained what artificial intelligence is, how machine learning, deep learning, and neural networks fit in, and gave real-world examples of how the technology is used today.

In this post, we’ll explore why artificial intelligence should be used alongside human intelligence, not instead of it. We’ll discuss some of the ethical questions surrounding AI, look at more practical examples of AI in today’s world, and finally, explain how to apply AI to your security plans to help keep your employees, students, teachers, and the general public safer.

Why AI is Stronger with Human Intelligence

In sci-fi movies like The Matrix, The Terminator, and 2001: A Space Odyssey (the list goes on), humanity reaches a dystopian point where we’re either dependent on self-aware machines to a fault or, worse, enslaved by them.

The fear that machines will replace humans entirely isn’t unfounded. You don’t have to look far to see that, historically, many jobs that required human labor in industries like farming and mass production have gradually been taken over by machines that are more efficient than human workers.

These reactive machines are a far cry from what humans can accomplish through mental labor, but smarter machines are already being used in more complex sectors such as recruitment and finance.

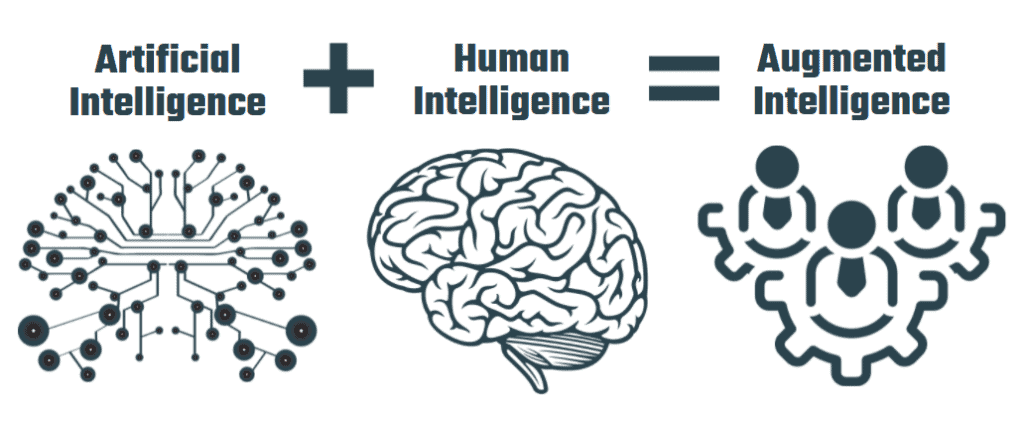

“Will smart machines really replace human workers? Probably not. People and AI both bring different abilities and strengths to the table. The real question is: how can human intelligence work with artificial intelligence to produce augmented intelligence.”

– David De Cremer and Garry Kasparov

What we have to remember is that while artificial intelligence can work around the clock without getting tired, perform certain tasks more accurately than humans, and even learn from experience, humans far outperform machines in imagination, creativity, judgment, and emotional awareness.

What’s more, AI is only as good as the data we train it on and the models we build. AI will always encounter problems it can’t solve on its own. That’s where humans need to step in, both to handle those cases and to use them to build better models.

In the Harvard Business Review article “AI Should Augment Human Intelligence, Not Replace It,” authors David De Cremer and chess grandmaster Garry Kasparov argue that in addition to artificial intelligence and human intelligence, we should create a third category that combines the two: augmented intelligence. Augmented intelligence pairs the strengths of AI with human judgment so that each complements the other, producing faster, more accurate work.

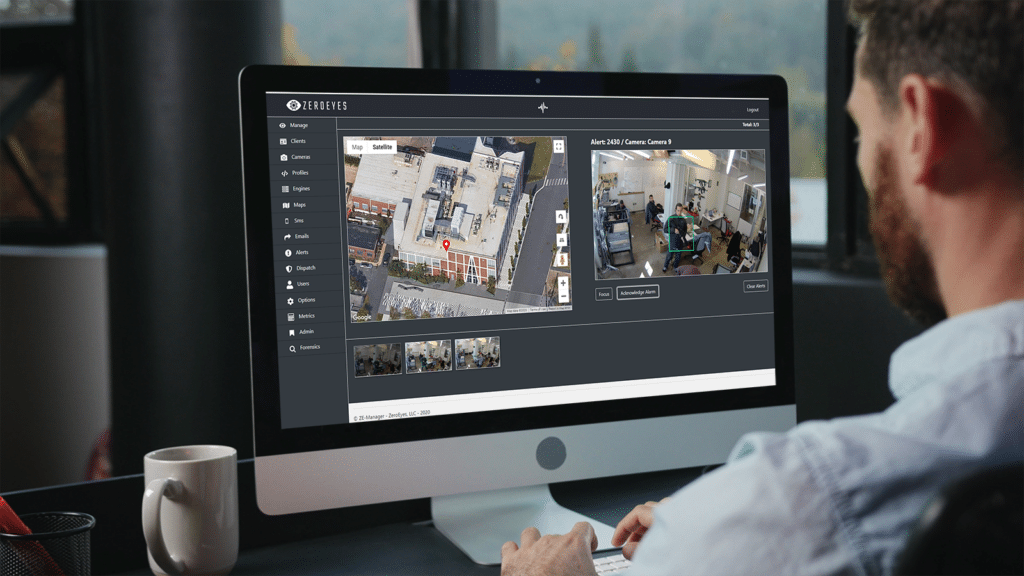

At ZeroEyes, we see the benefits of combining AI with human supervision every day. Our best-in-class AI gun detection software is trained on hundreds of thousands of images of weapons to determine whether a gun is present within a camera frame.

Because of the very serious nature of our business, which is detecting visible guns that may indicate the threat of an active shooter, our military-trained operations team verifies every weapon detection made by our DeepZero™ AI. As with any AI, there are “false positives”: instances when the AI flags a threat that isn’t actually there. When that happens, our team logs the report, and our developers use that information to build better models.
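To make the human-in-the-loop idea more concrete, here is a minimal Python sketch of a review step that sits between a detector and an alert. It is not ZeroEyes’ implementation: the `Detection` and `ReviewQueue` names, the 0.5 confidence threshold, and the simulated reviewer are all hypothetical assumptions for illustration. The point is the flow: the AI proposes, a human confirms or rejects, and rejections are kept for retraining.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Detection:
    """A hypothetical detection emitted by an object-detection model."""
    camera_id: str
    label: str
    confidence: float


@dataclass
class ReviewQueue:
    """Routes AI detections to a human reviewer before any alert goes out."""
    reviewer: Callable[[Detection], bool]  # returns True if the human confirms the threat
    threshold: float = 0.5                 # hypothetical minimum confidence to escalate
    alerts: List[Detection] = field(default_factory=list)
    false_positives: List[Detection] = field(default_factory=list)

    def process(self, detection: Detection) -> bool:
        # Low-confidence detections are dropped before reaching a human.
        if detection.confidence < self.threshold:
            return False
        if self.reviewer(detection):
            # Human confirmed: this is where an alert would be dispatched.
            self.alerts.append(detection)
            return True
        # Human rejected: log it so developers can use it to improve the model.
        self.false_positives.append(detection)
        return False


def simulated_reviewer(detection: Detection) -> bool:
    # Hypothetical stand-in for a human decision: cam-2 is a false alarm.
    return detection.camera_id != "cam-2"


queue = ReviewQueue(reviewer=simulated_reviewer)
queue.process(Detection("cam-1", "gun", 0.92))  # confirmed by the reviewer
queue.process(Detection("cam-2", "gun", 0.81))  # rejected, logged as a false positive
queue.process(Detection("cam-3", "gun", 0.20))  # below threshold, never escalated
```

Keeping rejected detections in their own list, rather than discarding them, is what lets the human review feed back into better training data.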

The Ethics of Artificial Intelligence

There’s an entire branch of ethics dedicated to the moral repercussions of artificial intelligence. Part of this field touches on issues that are more “out there,” including how we design, construct, use, and treat robots (robot ethics), and how we build artificially intelligent machines that behave morally.

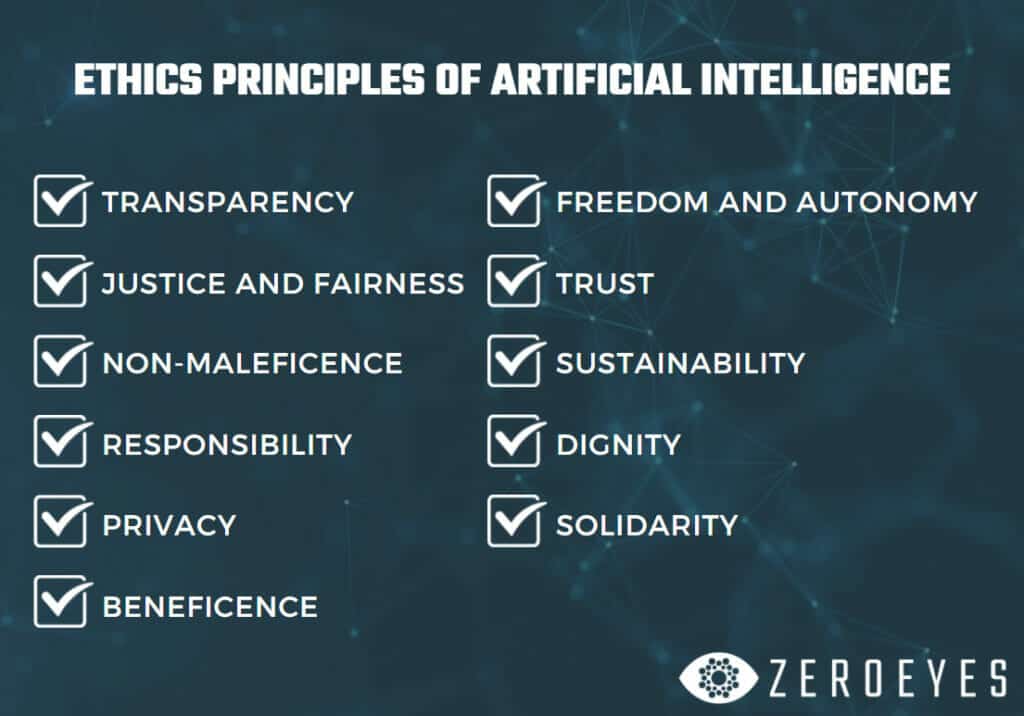

This debate has produced a widely cited set of ethical principles for artificial intelligence: guidelines that shape how we as humans design and build AI systems. These principles are transparency, justice and fairness, non-maleficence, responsibility, privacy, beneficence, freedom and autonomy, trust, sustainability, dignity, and solidarity.

But there are real-world ethical challenges surrounding AI that we’re encountering today, including bias in AI systems, privacy concerns, threats to human dignity, liability for autonomous AI (think self-driving cars), and the weaponization of artificial intelligence.

Bias can creep into AI algorithms in a number of ways and produce surprising results. Facial recognition is one example: gender-detection algorithms built by Facebook, Microsoft, and IBM all showed bias, identifying the gender of white men more accurately than that of darker-skinned men.

What’s more, a 2020 study showed that AI voice recognition software had higher error rates when transcribing African American speakers than white speakers.
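One common way to surface disparities like these is to measure a system’s error rate separately for each demographic group and compare the results. The Python sketch below assumes a simple classification task and uses made-up records; the `error_rate_by_group` function and the group names are hypothetical. A real speech-recognition audit would typically use word error rate rather than a simple right-or-wrong count per item.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def error_rate_by_group(records: Iterable[Tuple[str, str, str]]) -> Dict[str, float]:
    """Return the fraction of wrong predictions for each group.

    Each record is (group, true_label, predicted_label).
    """
    errors: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        if predicted != truth:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Made-up records, purely illustrative: (group, true label, model's prediction).
records = [
    ("group_a", "male", "male"),
    ("group_a", "female", "female"),
    ("group_a", "male", "male"),
    ("group_a", "female", "female"),
    ("group_b", "male", "male"),
    ("group_b", "female", "male"),
    ("group_b", "male", "female"),
    ("group_b", "female", "female"),
]

rates = error_rate_by_group(records)
gap = max(rates.values()) - min(rates.values())
print(rates, gap)  # prints {'group_a': 0.0, 'group_b': 0.5} 0.5
```

A model can look accurate overall (here, 75% correct) while hiding a large gap between groups, which is why audits report per-group numbers rather than a single average.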

Facial and voice recognition AI also opens a can of worms when it comes to privacy. Most people don’t want to be “scanned” without their knowledge, and they certainly don’t want their images or voices saved in a database, whether that data is collected legally in public or illegally in private without consent.

Preserving human dignity is another interesting area of AI ethics, and it relates to what we discussed earlier. The idea is that AI shouldn’t replace humans in roles that require respect and care, such as doctors, therapists, judges, and law enforcement officers.

Liability for self-driving cars has already become a hot-button issue: if a self-driving car malfunctions and causes a crash, who’s responsible — the driver, the car manufacturer or someone else?

Finally, the weaponization of AI refers to the use of AI-driven systems, such as robots, in military combat. In October 2019, the Defense Innovation Board, an advisory committee to the U.S. Department of Defense, published a report recommending principles for the ethical use of artificial intelligence by the DoD. The report called for a human operator to always be able to look into the “black box” and understand the kill-chain process for machines used for both combat and non-combat purposes.

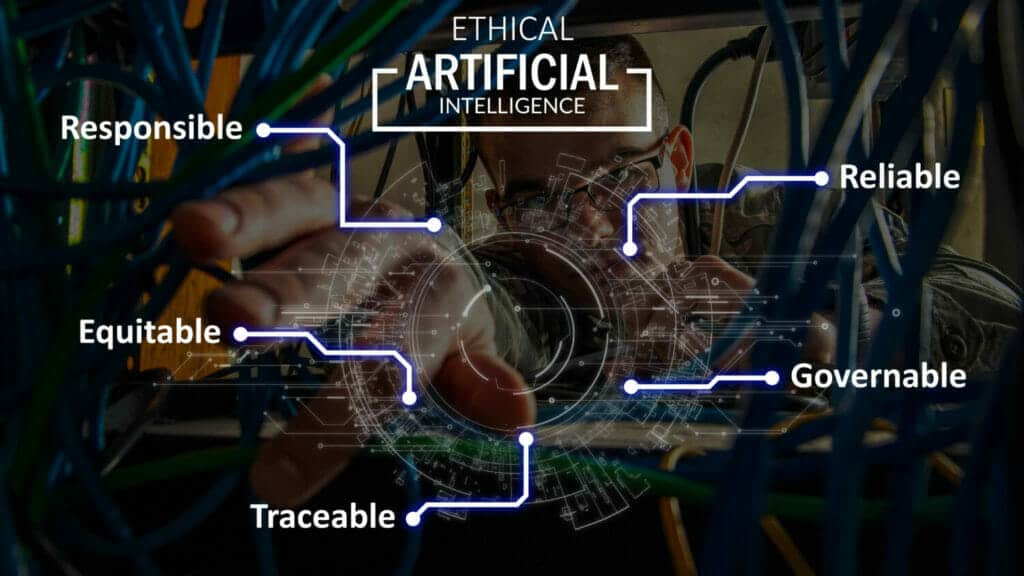

Despite the ethical challenges, the DoD still maintains that investment in the rapidly evolving area of AI development is the key to maintaining military and economic advantages. The Defense Department’s AI Ethical Principles, adopted in February 2020, maintain that AI used by the military should be Responsible, Equitable, Traceable, Reliable, and Governable.

At ZeroEyes, ethical AI and data practices are core tenets of how we build software. We were founded by former Navy SEALs with a deep understanding of the ethics of using AI to solve problems, which is why we’ve developed a system that uses object detection, rather than facial recognition, to detect threats. Because we scan for visible guns, not faces, and don’t store any data from video feeds, we eliminate privacy concerns and even the potential for the racial bias that has been associated with some AI. To learn more, read our white paper on the topic: “Eliminating Racial Bias in Security and Active Shooter Detection/Prevention AI.”

What’s more, members of our ZeroEyes team are also involved in The Data Ethics Consortium, a group of companies that addresses ethical data practices in the national security, law enforcement, and corporate security spaces.