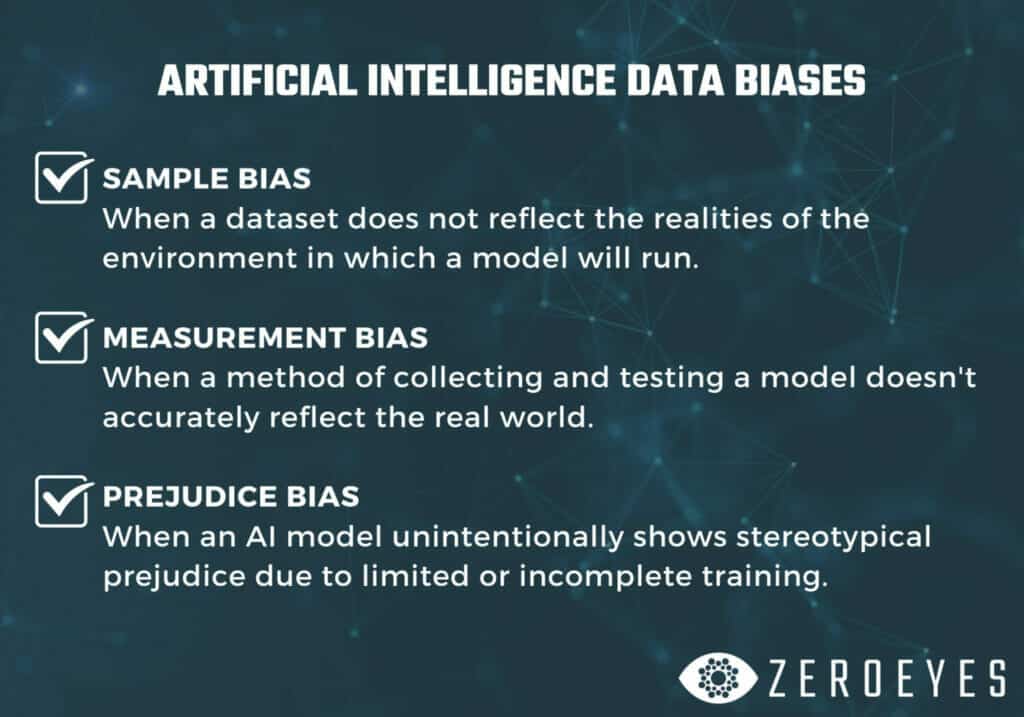

Sample, Measurement, and Prejudice Bias

Can software be biased? We tend to think of machine learning and deep learning AI as consistent, logical, and unwavering, but surprisingly, that isn’t always the case.

Bias is the source of many AI failures. So why, and how, does bias happen in AI models? The simple answer is that bias exists in these models because they’re created by humans.

Let’s take a look at three types of AI bias that can plague AI models – sample bias, measurement bias, and prejudice bias – and how developers can eliminate these biases with more thorough AI model training.

Sample Bias

Sample bias arises from the way AI models are trained. It occurs when the data used to train an AI model doesn’t accurately represent the conditions the model will operate in.

For example, if the goal of your AI model is to detect objects in both daytime and nighttime conditions, but the model is trained only on images and video captured during the day, there will be sample bias. The model can’t operate accurately at night because it was never taught to, even though that was the intent. Training the model under all the conditions it’s expected to operate in eliminates this bias.
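One way to catch the day/night gap described above before training is to count how much of the training set falls under each condition the model is expected to handle. The following is a minimal sketch, not a description of any particular platform: the function names, the condition labels, and the 20% threshold are all illustrative assumptions.

```python
from collections import Counter


def condition_coverage(train_conditions, expected_conditions):
    """Return the share of training samples for each expected condition."""
    counts = Counter(train_conditions)
    total = len(train_conditions)
    return {
        condition: (counts[condition] / total if total else 0.0)
        for condition in expected_conditions
    }


def underrepresented(coverage, min_share=0.2):
    """List conditions whose share falls below min_share (an assumed threshold)."""
    return sorted(c for c, share in coverage.items() if share < min_share)


# Hypothetical labels: 95 daytime samples and only 5 nighttime samples.
train_conditions = ["day"] * 95 + ["night"] * 5
coverage = condition_coverage(train_conditions, ["day", "night"])
flagged = underrepresented(coverage)

print(coverage)
print(flagged)
```

A condition that shows up in the flagged list is a signal to collect more data under that condition before relying on the model there.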

Measurement Bias

Measurement bias happens when only one type of measurement (or very few) is used to observe how the AI operates, and that measurement doesn’t reflect what’s happening in the real world.

For example, if a camera with a filter is used to collect object-detection data, the filter distorts the scene relative to real life, and the resulting measurements are skewed. To avoid this, use multiple forms of measurement that reflect what’s seen in the real world.
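The filter example can be checked numerically by comparing the same scenes as recorded by the capture device and by an unfiltered reference. The sketch below uses mean image brightness as the comparison. The brightness values are made up, and the function names and the tolerance of 10 are assumptions chosen only for illustration.

```python
from statistics import mean


def mean_shift(reference, measured):
    """Difference between the average measured value and the average reference value."""
    return mean(measured) - mean(reference)


def is_skewed(reference, measured, tolerance=10.0):
    """Flag the measurement if its average drifts beyond an assumed tolerance."""
    return abs(mean_shift(reference, measured)) > tolerance


# Hypothetical mean-brightness readings for the same five scenes.
reference_brightness = [120, 130, 125, 135, 128]  # unfiltered camera
filtered_brightness = [90, 98, 94, 101, 96]       # camera with a filter

shift = mean_shift(reference_brightness, filtered_brightness)
print(shift)
print(is_skewed(reference_brightness, filtered_brightness))
```

A single statistic like this is itself only one form of measurement, so in practice it would sit alongside other checks rather than replace them.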

Prejudice Bias

Facial and speech pattern recognition AI that doesn’t take into account all of the variations of the people using it will encounter some prejudice bias, even if that bias is unintentional.

Real-world examples of prejudice bias have occurred on some of today’s biggest AI platforms. Algorithms built by Facebook, Microsoft, and IBM have all shown bias when detecting people’s gender: they detected the gender of white men more accurately than that of darker-skinned men.

What’s more, a 2020 study showed that AI voice recognition software had higher error rates when transcribing African Americans’ voices than when transcribing the voices of speakers of other races.

To prevent prejudice bias in AI, it’s up to developers to recognize where this bias might occur and to design their models and training data to eliminate it.
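Recognizing where this bias occurs usually starts with measuring accuracy separately for each group of users rather than as one overall number. Here is a minimal sketch of that comparison. The group names, the records, and the function names are hypothetical and are not drawn from the studies mentioned above.

```python
from collections import defaultdict


def accuracy_by_group(records):
    """Compute accuracy per group from (group, predicted, actual) tuples."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for group, predicted, actual in records:
        total[group] += 1
        if predicted == actual:
            correct[group] += 1
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group):
    """Difference between the best-served and worst-served group."""
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation records: (group, predicted label, actual label).
records = [
    ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 1, 1), ("group_a", 0, 0),
    ("group_b", 1, 0), ("group_b", 0, 0), ("group_b", 1, 1), ("group_b", 0, 1),
]

per_group = accuracy_by_group(records)
gap = accuracy_gap(per_group)

print(per_group)
print(gap)
```

A large gap between groups points to where more representative training data or further model work is needed.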

Eight Steps to Eliminate AI Bias

Here are eight steps we at ZeroEyes follow to help avoid biases in our AI gun detection platform, DeepZero™:

- We have a defined problem we’re solving

- We structure our data collection to include different perspectives

- We understand our AI model training data

- We seek to assemble a diverse team to ask varying questions

- We think about our end users

- We annotate vast amounts of data

- We test and deploy our AI with feedback in mind

- We have concrete roadmaps to improve our models based on that feedback

Our next blog post, Avoiding Deep Learning AI Bias Part 2: The ZeroEyes Fairness Report, will walk you through the in-depth study ZeroEyes conducted to examine possible biases in our platform, and our methods for eliminating them.